Machine Learning Research

Making LLMs Explainable: Google’s Gemma Scope probes how large language models think

Researchers have probed the inner workings of individual layers of large language models. A new tool applies this approach to all layers.

Machine Learning Research

How often do large language models make up information when they generate text based on a retrieved document? A study evaluated the tendency of popular models to hallucinate while performing retrieval-augmented generation (RAG).

Machine Learning Research

A new model generates tokens faster than current transformers, especially when processing long inputs.

Machine Learning Research

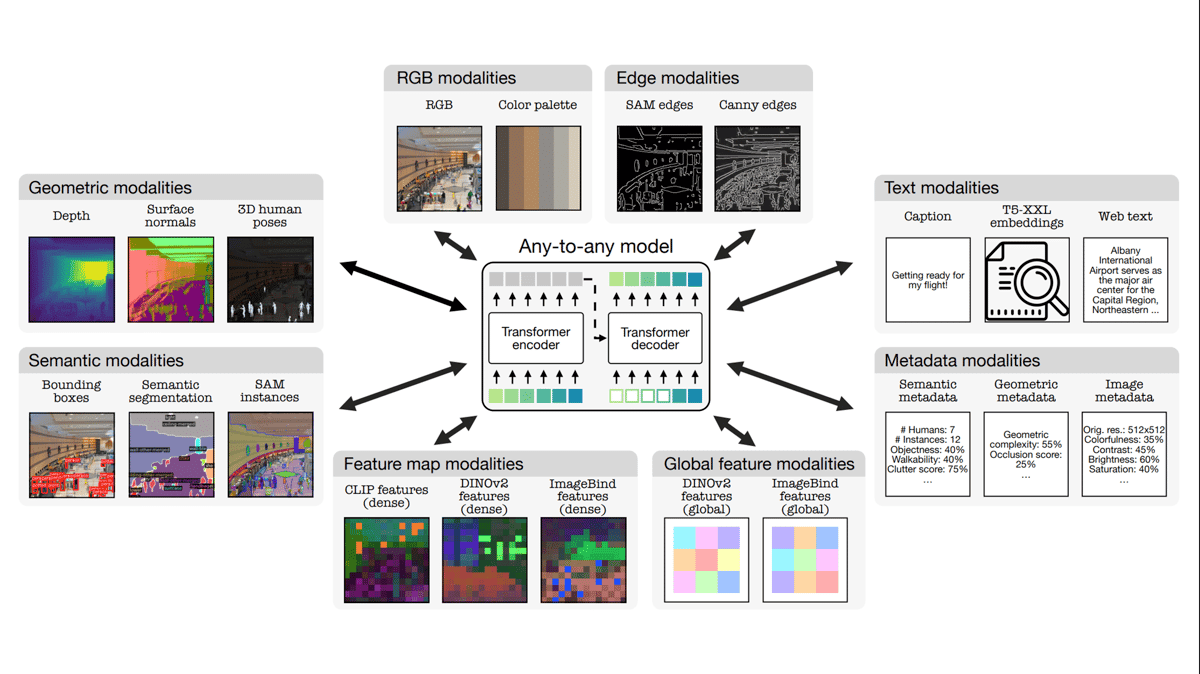

Researchers introduced a model that handles an unprecedented number of input and output types, including many related to performing computer vision tasks.

Generative AI

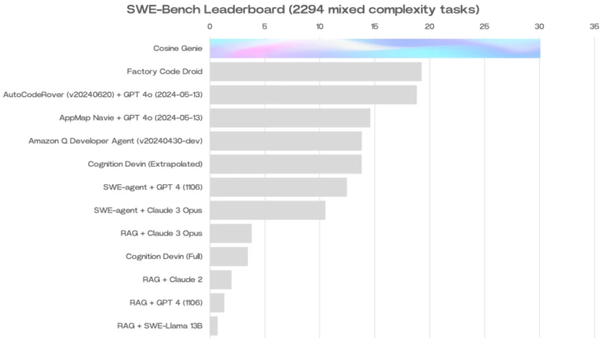

An agentic coding assistant boosted the state of the art in an important benchmark by more than 30 percent.

Machine Learning Research

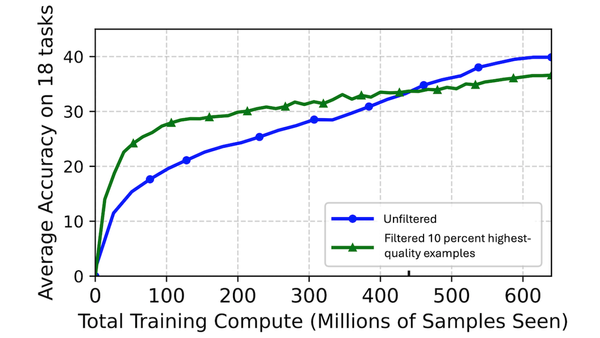

When training vision-language models, developers often remove lower-quality examples from the training set. But keeping only the highest-quality examples may not be ideal, researchers found.

Generative AI

Alibaba followed up its open-weights Qwen2 large language models with specialized variations.

Generative AI

Image generation continued its rapid march forward with a new version of Google’s flagship text-to-image model.

Generative AI

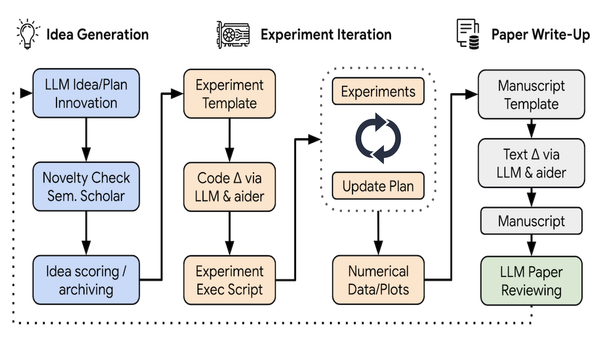

While some observers argue that large language models can’t produce truly original output, new work prompted them to generate novel scientific research.

Machine Learning Research

Literary works are challenging to translate. Their relative length, cultural nuances, idiomatic expressions...

Generative AI

A new company with deep roots in generative AI made an eye-catching debut.

Machine Learning Research

A seemingly innocuous form of expression, ASCII art opens a new vector for jailbreak attacks on large language models (LLMs), prompting them to generate outputs that their developers tuned them to avoid producing.