Periscope Vision: Researchers used deep learning to see around corners.

Wouldn’t it be great to see around corners? Deep learning researchers are working on it. Researchers developed deep-inverse correlography, a technique that interprets reflected light to reveal objects outside the line of sight.

What’s new: Stanford researcher Christopher Metzler and colleagues at Princeton, Southern Methodist University, and Rice University developed deep-inverse correlography, a technique that interprets reflected light to reveal objects outside the line of sight. The technique can capture submillimeter details from one meter away, making it possible to, say, read a license plate around a corner.

Key insight: Light bouncing off objects retains information about their shape even as it ricochets off walls. The researchers modeled the distortions likely to occur under such conditions and generated a dataset accordingly, enabling them to train a neural network to extract images of objects from their diminished, diffuse, noisy reflections.

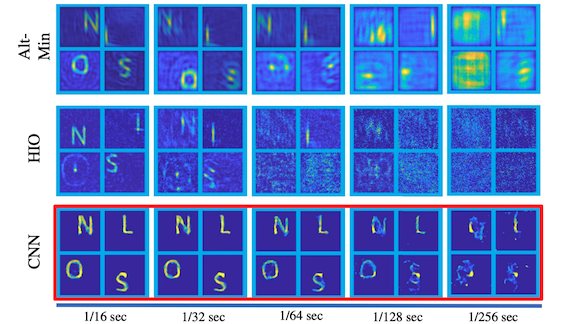

How it works: The experimental setup included an off-the-shelf laser and camera, a rough wall (called a virtual detector), and a U-Net convolutional neural network trained to reconstruct an image from its reflections.

- To train the U-Net, the researchers generated over 1 million images in pairs, one a simple curve (the team deemed natural images infeasible), the other a simulation of the corresponding reflections.

- The researchers shined the laser at a wall. Bouncing off the wall, the light struck an object around the corner. The light caromed off the object, back to the wall, and into the camera.

- The U-Net accepted the camera’s output and disentangled interference patterns in the light waves to reconstruct an image.
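The data-generation step above can be sketched in a few lines. This is a minimal illustration, not the researchers' simulator: the article says only that each curve was paired with "a simulation of the corresponding reflections," so the sketch assumes, purely for illustration, that the simulated measurement is a noisy autocorrelation of the hidden image. The function names (`draw_curve`, `autocorrelation`, `make_pair`), the random-walk curve, and the Gaussian noise model are all hypothetical choices.

```python
import random


def draw_curve(size, steps, rng):
    """Return a size x size image (list of rows) holding one random-walk curve."""
    img = [[0.0] * size for _ in range(size)]
    r, c = size // 2, size // 2
    for _ in range(steps):
        img[r][c] = 1.0
        # Step to a neighboring pixel, clamped so the curve stays in the image.
        r = min(size - 1, max(0, r + rng.choice((-1, 0, 1))))
        c = min(size - 1, max(0, c + rng.choice((-1, 0, 1))))
    return img


def autocorrelation(img):
    """Circular autocorrelation, computed directly (slow, but fine for tiny images)."""
    n = len(img)
    out = [[0.0] * n for _ in range(n)]
    for dr in range(n):
        for dc in range(n):
            out[dr][dc] = sum(
                img[r][c] * img[(r + dr) % n][(c + dc) % n]
                for r in range(n)
                for c in range(n)
            )
    return out


def make_pair(size=16, steps=24, noise_std=0.5, seed=0):
    """Return (measurement, target): a noisy stand-in measurement and its clean curve."""
    rng = random.Random(seed)
    target = draw_curve(size, steps, rng)
    clean = autocorrelation(target)
    # Assumed noise model: independent Gaussian noise on every entry.
    measurement = [[v + rng.gauss(0.0, noise_std) for v in row] for row in clean]
    return measurement, target


if __name__ == "__main__":
    measurement, target = make_pair()
    print(len(measurement), len(measurement[0]))
```

A network such as a U-Net would then be trained to map each `measurement` back to its `target`. At realistic image sizes, an FFT-based autocorrelation would replace the direct loop used here.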

Results: The researchers tested the system on hidden letters and numbers 1 centimeter tall. Quantitative results weren’t practical given the current state of non-line-of-sight vision, since the camera inevitably fails to capture an unknown amount of detail. Qualitatively, however, the researchers deemed their system’s output a substantial improvement over the previous state of the art. Moreover, the U-Net runs thousands of times faster than the earlier approach.

Yes, but: Having been trained on simple generated images, the system perceived only simple outlines. Moreover, the simulation on which the model was trained may not correspond to real-world situations closely enough to be generally useful.

Why it matters: Researchers saw around corners.

We’re thinking: The current implementation is likely far from practical applications. But it is a reminder that AI can tease out information from subtle cues that are imperceptible to humans.